Microsoft Unveils Phi-4

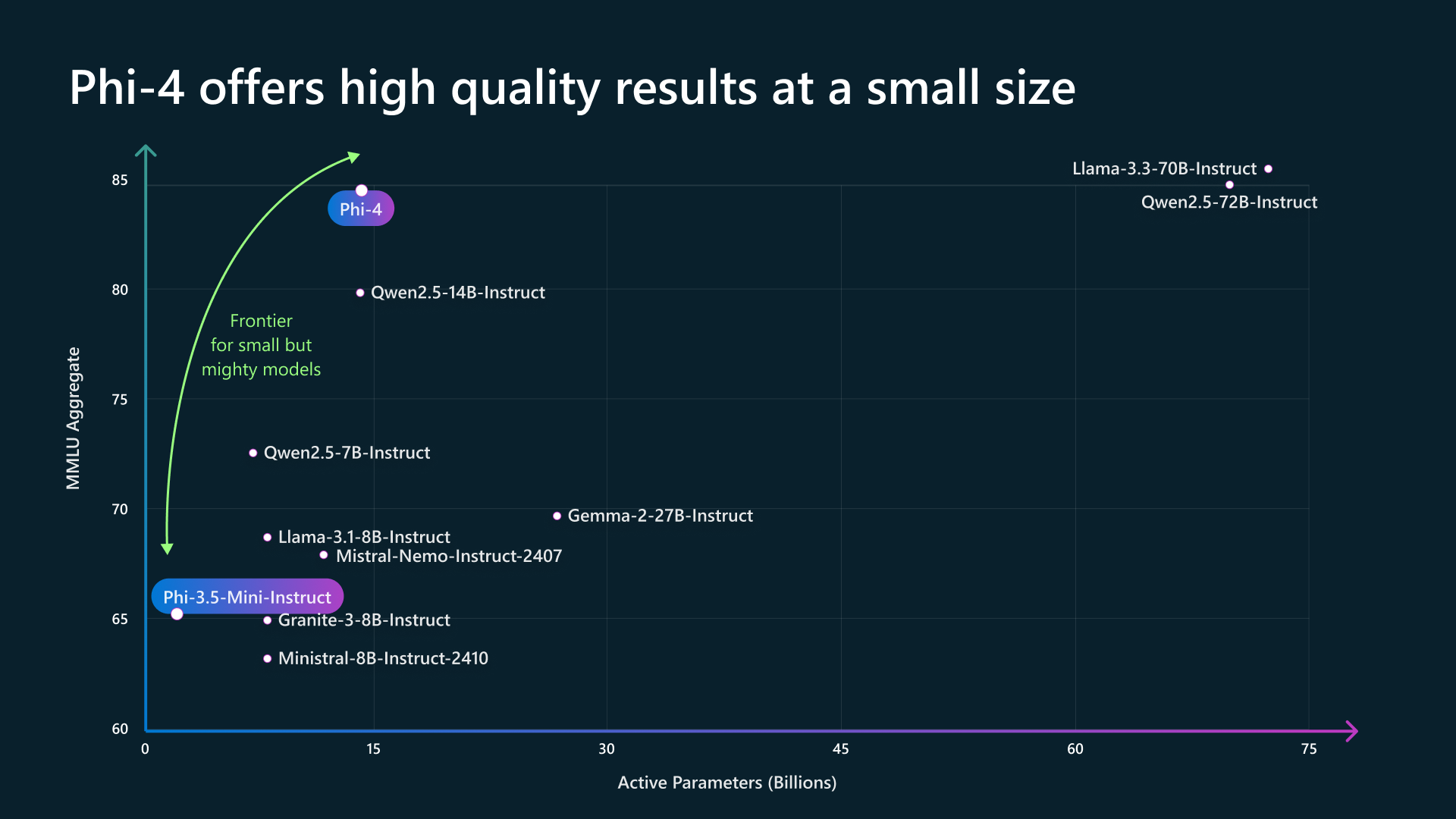

Microsoft has unveiled Phi-4, a compact 14-billion-parameter language model that challenges a piece of conventional wisdom in artificial intelligence. While much of the industry has been fixated on building ever-larger models, Phi-4 suggests that bigger isn’t always better.

Breaking the Scale Barrier

The most remarkable aspect of Phi-4 is that, according to Microsoft’s reported benchmarks, it outperforms substantially larger language models, including GPT-4o and Gemini Pro 1.5, particularly in mathematical reasoning and graduate-level STEM questions. This is especially noteworthy given that Phi-4 is believed to have only a fraction of the parameters of those competitors.

The Secret Sauce: Quality Over Quantity

Microsoft’s approach to training Phi-4 departs from the usual recipe. Instead of relying solely on vast amounts of internet-scraped data, the team used roughly 400 billion tokens of synthetic data, carefully generated and validated by AI systems. This focus on quality over quantity appears to be a key factor in Phi-4’s performance.

Technical Improvements and Capabilities

The model brings several technical advancements to the table:

- A context window of 4,000 tokens, double that of its predecessor

- Enhanced efficiency in processing and deployment

- Specialized capabilities in mathematical reasoning and STEM-related tasks

Industry Implications

Phi-4 could mark a shift in how AI models are built:

- Resource Efficiency: Smaller models require less computational power and energy, making AI more sustainable and accessible.

- Specialized Excellence: Phi-4 demonstrates that focused training can yield better results than general-purpose large models in specific domains.

- Cost-Effective Deployment: The model’s smaller size could translate to reduced operational costs for businesses implementing AI solutions.
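
To make the resource argument concrete, here is a rough back-of-the-envelope sketch in Python of how much memory a 14-billion-parameter model needs just to hold its weights at common numeric precisions. The parameter count comes from the article; the function name `weight_memory_gb` and the precision table are illustrative assumptions, and the estimate ignores activations, the key-value cache, and framework overhead, so real deployments need more.

```python
# Rough estimate of weight memory only; not a measurement of Phi-4 itself.
# Bytes per parameter for common numeric formats (standard widths).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}

PHI4_PARAMS = 14e9  # "14 billion parameter" figure quoted in the article


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Print one line per precision, e.g. for comparing against a GPU's memory.
for precision, width in BYTES_PER_PARAM.items():
    print(f"{precision:>10}: {weight_memory_gb(PHI4_PARAMS, width):.1f} GB")
```

Under these assumptions, the weights take about 28 GB at 16-bit precision and about 7 GB at 4-bit, which is the kind of difference that decides whether a model fits on a single accelerator.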

Looking Ahead

As Phi-4 moves from research preview to broader availability through Azure AI Foundry and eventually Hugging Face, its impact could reshape how we think about AI model development. The success of Phi-4 suggests that future breakthroughs might come from smarter architecture and training approaches rather than simply scaling up model size.

What This Means for the Future

This development signals a potential new direction in AI research where efficiency and specialized capability take precedence over raw size. It could lead to more sustainable AI development and wider adoption of AI technologies across industries previously limited by resource constraints.
